How to Backdoor Azure Applications and Abuse Service Principals

If an attacker gains access to an Azure tenant (with sufficient permissions), they can add a “secret” or a “certificate” to an application. This gives the attacker single-factor access to Azure and lets them persist within the client environment. Further, every application in an Azure tenant has a service principal that is created automatically each time an application is registered in the Azure portal. A service principal is essentially an identity used by applications and tools to access resources or perform automated actions.

Attackers want to target service principals because:

- Service accounts and service principals do not have MFA

- Attackers can log into Azure using a service principal account

- A service principal exists for every application in Azure (and most companies have several applications)

- These accounts cannot be controlled through conditional access

This blog post shows you how to create/register an application in the Azure portal, how to backdoor the application (i.e. add a “secret”), and lastly, how to detect this. I tried to make this blog post as simple as I possibly can :)

This is one of the techniques I covered in my AAD/M365 attack matrix. I’m slowly going to go through each of these techniques and cover how they work and how to detect them.

Step 1: Malicious App Registration

If an attacker wants to backdoor an application, they first need an application to target. This can either be an existing application in the portal, or a new application the attacker registers. That gives three possible starting points:

- Send a phishing email that tricks an unsuspecting user into registering a malicious application

- Register an application within the Azure portal

- Find an existing application within the Azure portal to target

In this example, I registered a new application called CatsRCute. When you register an application, Azure automatically shows you (as you can see on this screen) its Application ID, Object ID and tenant ID. The Object ID is a unique value for every application object and service principal; it identifies that object in Azure.

Step 2: Backdoor the application

Every application offers two methods for authenticating its service principal: a “secret” or a “certificate”. An attacker can add a new secret or certificate that lets them log into Azure, effectively acting as a “backdoor”.

As you can see from the screenshot below, I added a malicious client secret named “EvilSecret”. The default expiration, as you can see, is approximately 2 years. When the secret is created, Azure automatically gives you a “value”, which has been redacted by Azure. This “value” serves as the “password” used to log in as this service principal.

Of course, you are not limited to adding a client secret; you can also add a certificate instead.

Step 3: Log in with your malicious secret

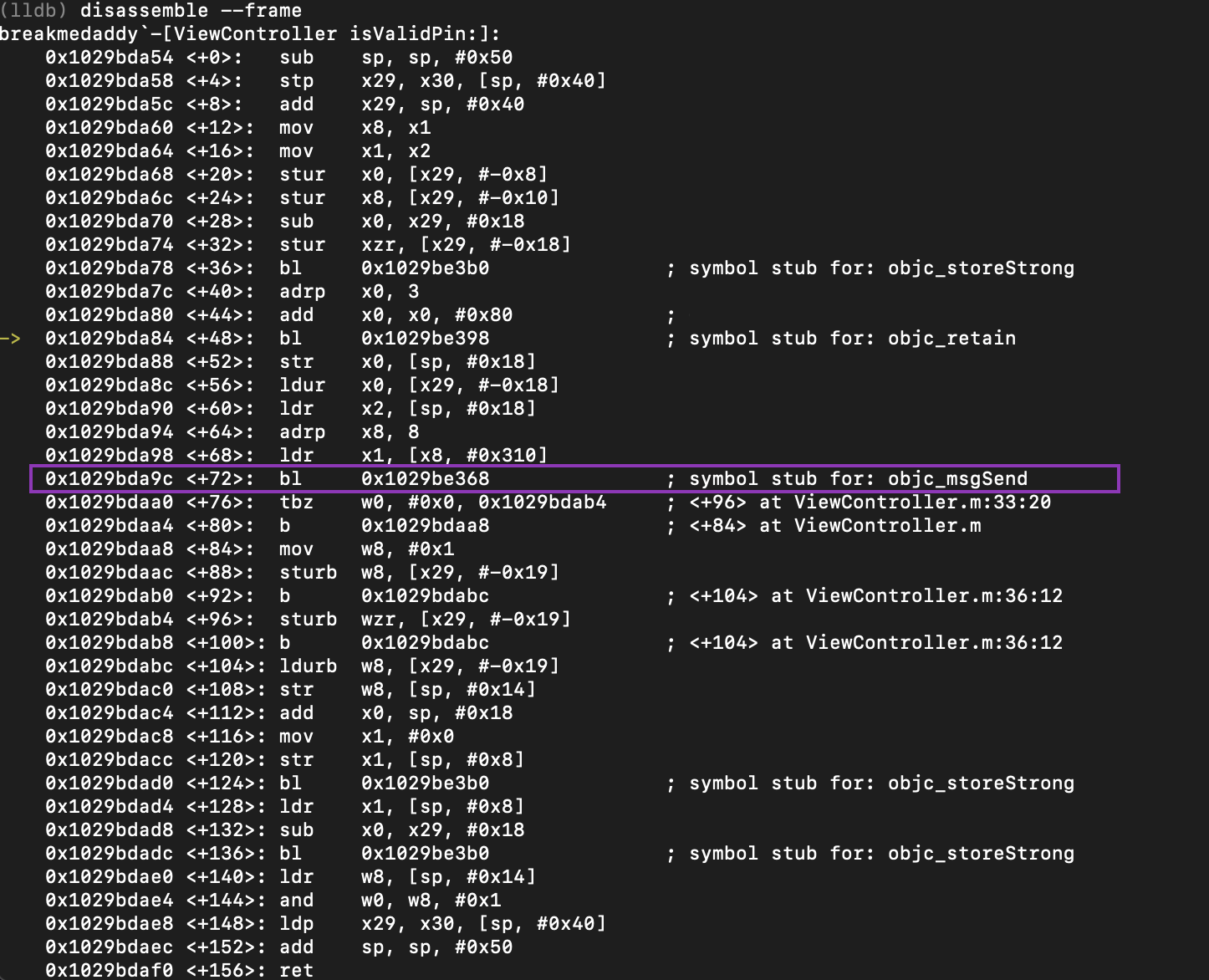

There are several ways to log in, but for this exercise I opted to use Microsoft’s Azure CLI. The screenshot excerpt from Microsoft’s documentation shows exactly how to log in with a service principal.

As the screenshot shows, to log in with the “EvilSecret” we added to the CatsRCute application, you need a few details:

- The application ID (which acts as the username for the service principal). You can find this on the application’s overview page, as shown in the first screenshot.

- The password: the redacted “Value” string that was shown when we created the client secret “EvilSecret”

- The tenant associated with the application, which is also shown on the application’s overview page as “Directory (tenant) ID”.

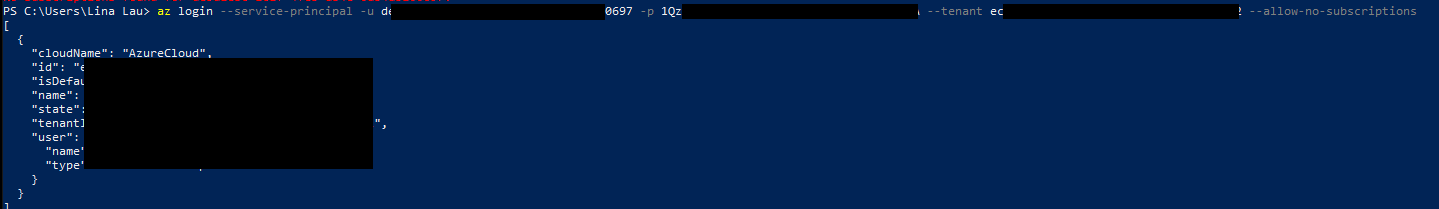

Using the Azure CLI, this is what I did to log in.

Detection Methodology

A forensically sound methodology for detecting something like this focuses on a few elements:

- Review all application permissions that exist for all applications

- Review all service principals with secrets / certificates

- Review all sign-in logs for logons from service principals, focusing on anomalies

- Review audit logs for an action where a “secret” or “certificate” is added to an application

- Review all users who have application-level roles assigned, e.g. “Cloud Application Administrator” for the CatsRCute application (each application can have more)

- Review the application owners; in this case, I created the application with my Global Admin account (bad Lina!)
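To put the credential review into practice, here is a minimal Python sketch. It assumes you have exported your application objects as JSON (for example from the Microsoft Graph `/applications` endpoint), where each object carries `passwordCredentials` (secrets) and `keyCredentials` (certificates) lists with `startDateTime` and `endDateTime`. It flags credentials that were added recently or that live unusually long, such as the roughly 2-year “EvilSecret”. The function names and the 30-day and 365-day thresholds are hypothetical choices; tune them to your environment.

```python
from datetime import datetime, timedelta, timezone


def parse_graph_time(value):
    """Parse a UTC ISO 8601 timestamp such as '2021-06-01T10:00:00Z'.

    Assumes Graph-style UTC timestamps. Fractional seconds are dropped
    because day-level precision is enough for this review.
    """
    return datetime.strptime(value[:19], "%Y-%m-%dT%H:%M:%S").replace(tzinfo=timezone.utc)


def flag_credentials(apps, now, recent_days=30, max_lifetime_days=365):
    """Return (app name, kind, credential name, reasons) for suspicious credentials.

    `apps` is a list of exported application objects (assumed shape:
    displayName, passwordCredentials, keyCredentials).
    """
    findings = []
    for app in apps:
        # Secrets live in passwordCredentials, certificates in keyCredentials.
        creds = [("secret", c) for c in app.get("passwordCredentials", [])]
        creds += [("certificate", c) for c in app.get("keyCredentials", [])]
        for kind, cred in creds:
            start = parse_graph_time(cred["startDateTime"])
            end = parse_graph_time(cred["endDateTime"])
            reasons = []
            if now - start <= timedelta(days=recent_days):
                reasons.append("recently added")
            if end - start > timedelta(days=max_lifetime_days):
                reasons.append("long-lived")
            if reasons:
                findings.append((app.get("displayName"), kind, cred.get("displayName"), reasons))
    return findings
```

Recently added and long-lived are only two signals; a credential that trips either one still needs to be matched against the audit log to see who added it.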

You also want to look at application owners and all users with application-level roles because all of these users have control of the service principal: they can add their own secret or certificate to it. In this instance, I am the owner of the application and I have Global Admin permissions, which raises the severity of the access an attacker would have in your tenant. This can allow an attacker to escalate their privileges from a low-privilege user to a Global Administrator.

Within the Sign-In logs for service principals, you should see this event:
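One simple way to look for anomalies is to compare recent service principal sign-ins against a baseline of the IP addresses each application normally signs in from. The sketch below assumes sign-in records exported as JSON with `appId` and `ipAddress` fields (as in Microsoft Graph sign-in records); the helper names and the choice of IP address as the only signal are assumptions, and a real review would also look at location, time of day and failure patterns.

```python
from collections import defaultdict


def build_baseline(history):
    """Map each appId to the set of IP addresses seen in past sign-ins.

    Assumes records with 'appId' and 'ipAddress' keys, as in exported
    service principal sign-in logs.
    """
    baseline = defaultdict(set)
    for event in history:
        baseline[event["appId"]].add(event["ipAddress"])
    return baseline


def unusual_signins(events, baseline):
    """Return sign-ins from an IP never seen for that app in the baseline.

    Apps with no baseline at all are flagged too, since a service principal
    that suddenly starts signing in is itself worth a look.
    """
    return [
        e for e in events
        if e["ipAddress"] not in baseline.get(e["appId"], set())
    ]
```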

Review of the audit logs for the event “Update application – Certificates and secrets management” will show when a secret/certificate is added to an application:
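If you export the audit logs as JSON, a short filter can pull out these events along with who made the change and which application it touched. This sketch assumes records shaped like Microsoft Graph `directoryAudit` entries (`activityDisplayName`, `activityDateTime`, `initiatedBy`, `targetResources`); the function name `secret_changes` is hypothetical. It matches the activity name loosely because the exact dash and spacing in the display name may differ between exports.

```python
def secret_changes(records):
    """Return credential-management events from exported audit records.

    Assumes Graph directoryAudit-style records: activityDisplayName,
    activityDateTime, initiatedBy (user or app) and targetResources.
    """
    hits = []
    for rec in records:
        # Match loosely: exports may differ in dash style and trailing spaces.
        name = (rec.get("activityDisplayName") or "").strip().lower()
        if not (name.startswith("update application")
                and "certificates and secrets management" in name):
            continue
        initiator = rec.get("initiatedBy") or {}
        user = initiator.get("user") or {}
        app = initiator.get("app") or {}
        # A change can be made by a signed-in user or by another application.
        actor = user.get("userPrincipalName") or app.get("displayName") or "unknown"
        targets = [t.get("displayName") for t in rec.get("targetResources") or []]
        hits.append({"when": rec.get("activityDateTime"), "actor": actor, "targets": targets})
    return hits
```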

It’s also very important to audit the application owners and the people in each app-level role. For example, my application had the role of “Cloud application administrator”, with one user assigned called “banana”. There is usually more than one role, with more users in each, but all of these users can potentially be part of the attack path, as they have control of a service principal with global administrator rights.
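To enumerate who can add credentials to each application, you can combine its owners with the users assigned to credential-capable roles scoped to it. Application Administrator and Cloud Application Administrator can both manage application credentials; if they are assigned tenant-wide they cover every application, so include those holders too. The inventory format below (`owners`, `scopedRoles`) and the function names are hypothetical; populate them from your own export.

```python
# Directory roles that can manage an application's credentials.
# Exact-match on the role display name, so normalise names in your export.
CREDENTIAL_ROLES = {"Application Administrator", "Cloud Application Administrator"}


def credential_controllers(app):
    """Return everyone who could add a secret or certificate to `app`.

    `app` uses a hypothetical inventory format:
    {"displayName": ..., "owners": [...], "scopedRoles": {role: [users]}}.
    """
    controllers = set(app.get("owners", []))
    for role, members in app.get("scopedRoles", {}).items():
        if role in CREDENTIAL_ROLES:
            controllers.update(members)
    return controllers


def apps_with_unexpected_controllers(apps, approved):
    """Map app name -> controllers that are not on the `approved` list."""
    report = {}
    for app in apps:
        extra = credential_controllers(app) - set(approved)
        if extra:
            report[app["displayName"]] = extra
    return report
```

For the CatsRCute example, `credential_controllers` would return both the owner account and “banana”.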

This screenshot shows you who has the role of “Cloud application administrator” and this is my fake user “banana”.
